Known Bots and Crawlers

Viewing Known Bots and Crawlers

HUMAN maintains a list of known bots and crawlers (for example, Google crawlers).

To see the list of known bots and crawlers:

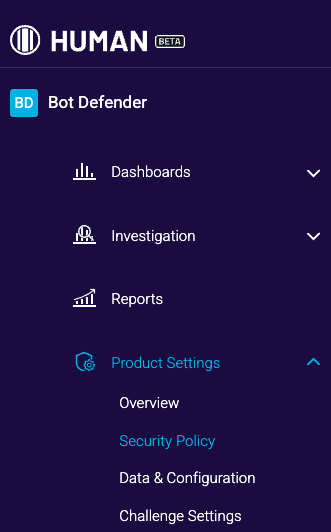

- Go to Product Settings > Security Policy.

- Under Policy rules, select Known bots & Crawlers.

Managing Known Bots and Crawlers

Depending on your organization’s needs, you can apply one of the following policies to any bot or crawler:

- Allow: All traffic from the selected bot or crawler will pass through.

- Deny: All traffic from the selected bot or crawler will be blocked.

- No policy: Turn off the toggle button to deactivate the policy. All traffic from this bot or crawler will be treated as traffic from any other source. You can always switch the policy back on.

The recommended policy is marked with a star.

Bots or crawlers that can be abused by attackers are marked with an exclamation mark. We don’t advise allowing traffic from these bots or crawlers unless absolutely necessary.

What Are Abusable Policy Rules?

These are policy rules that allow traffic based on the User Agent header only. Because attackers can easily spoof the User Agent header, applying such a rule to a bot or crawler lets them impersonate it and circumvent Bot Defender protections. To prevent this, include a condition category based on an IP.

In short, a policy rule is classified as abusable if it:

- Is an Allow rule

- Is based on the User Agent header only

- Doesn’t contain a condition category based on an IP
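The three criteria above can be sketched as a small check. This is only an illustration and not HUMAN's implementation: the `PolicyRule` class, its field names, and the `"user_agent"` and `"ip"` category labels are hypothetical names chosen for this example.

```python
from dataclasses import dataclass, field


@dataclass
class PolicyRule:
    # Hypothetical model of a policy rule; not an actual HUMAN data structure.
    action: str  # "allow" or "deny"
    condition_categories: set[str] = field(default_factory=set)


def is_abusable(rule: PolicyRule) -> bool:
    """Return True if the rule meets all three criteria listed above."""
    is_allow = rule.action == "allow"
    # "Based on the User Agent header only": the sole condition category.
    user_agent_only = rule.condition_categories == {"user_agent"}
    # Stated separately to mirror the third criterion, although a
    # User-Agent-only rule already implies that no IP condition is present.
    has_ip_condition = "ip" in rule.condition_categories
    return is_allow and user_agent_only and not has_ip_condition


ua_only = PolicyRule("allow", {"user_agent"})
ua_and_ip = PolicyRule("allow", {"user_agent", "ip"})
print(is_abusable(ua_only))    # True
print(is_abusable(ua_and_ip))  # False
```

Under these assumptions, adding an IP-based condition category is enough to move an Allow rule out of the abusable class.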

Traffic Volume

When the traffic detected on an application is below the detection threshold set by HUMAN, the application’s Volume is shown as N/A. Once the detection threshold is reached, the application’s Volume is displayed. When no traffic is detected, the Volume is displayed as 0.
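The display rules above can be read as the following sketch. The function name, its parameters, and the threshold value are hypothetical; the actual threshold is set by HUMAN and is not stated here. The sketch assumes that the "no traffic" case takes precedence over the "below threshold" case, since zero is also below any positive threshold.

```python
def display_volume(detected_traffic: int, detection_threshold: int) -> str:
    """Return the string shown in the Volume column (illustrative only)."""
    if detected_traffic == 0:
        # No traffic detected at all.
        return "0"
    if detected_traffic < detection_threshold:
        # Some traffic, but not enough to reach the detection threshold.
        return "N/A"
    # Threshold reached: show the measured volume.
    return str(detected_traffic)


# The threshold of 100 is an arbitrary value for the example.
for traffic in (0, 50, 100):
    print(traffic, "->", display_volume(traffic, detection_threshold=100))
```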